
In light of rapidly evolving customer expectations and massive growth in demand for the digital communications and collaboration services Microsoft Teams offers, we’ve learned a lot over the past two years about what it takes to deliver a high-performance experience with Teams. In fact, we’ve built out our product roadmap and have already made several enhancements designed to ensure Teams delivers the best performance possible.

The best performance entails efficient use of device resources, such as CPU and memory, and the elasticity to provide a quality experience across a range of device types, network speeds, and variability in connectivity. In this blog, we’re providing an overview of our approach to enhancing Teams’ performance, including for fundamentals, and sharing our continuous improvements to the performance and user experience of Teams. Here are some of the ways we’re doing that:

Setting performance goals

We have aggressive, centrally documented targets for application responsiveness, latency, memory, power, and disk footprint. We focus on edge-case metrics, which may result from very low-end devices, as well as on the average experience. Both are measured on the user’s device to best represent the user experience across different scenarios. Those targets are then applied to the development of features. Factors that influence these scenarios and targets include customer and partner feedback, the frequency with which the scenario occurs, and the expected impact that changes will have on our users.
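As a rough illustration of tracking both the average and the edge-case experience, the sketch below summarizes a set of latency samples by mean and by a high percentile. The sample values, the choice of the 95th percentile, and the function names are assumptions made for this example, not details of the Teams telemetry pipeline.

```python
import math
from statistics import mean


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def summarize(samples):
    """Report the average alongside an edge-case (tail) metric."""
    return {"mean": mean(samples), "p95": percentile(samples, 95)}


# Hypothetical launch latencies in milliseconds; one slow, low-end device.
latencies_ms = [100, 110, 120, 130, 140, 150, 160, 170, 180, 1000]
summary = summarize(latencies_ms)
print(summary)
```

A single slow device barely registers in a median but dominates the tail percentile, which is why a target set only on the typical value can hide a poor experience on low-end hardware.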

Analyzing performance with advanced reporting and insights

To help us intensively analyze the performance metrics, extensive instrumentation is added to the clients. Metrics are captured first in a lab and then gradually with a small set of users as we deploy to validate our changes. Once the metrics meet their targets, we expand to more users. Dashboards with different pivots are in place to provide visualizations to observe trends, identify improvement opportunities, and validate the impact of changes.

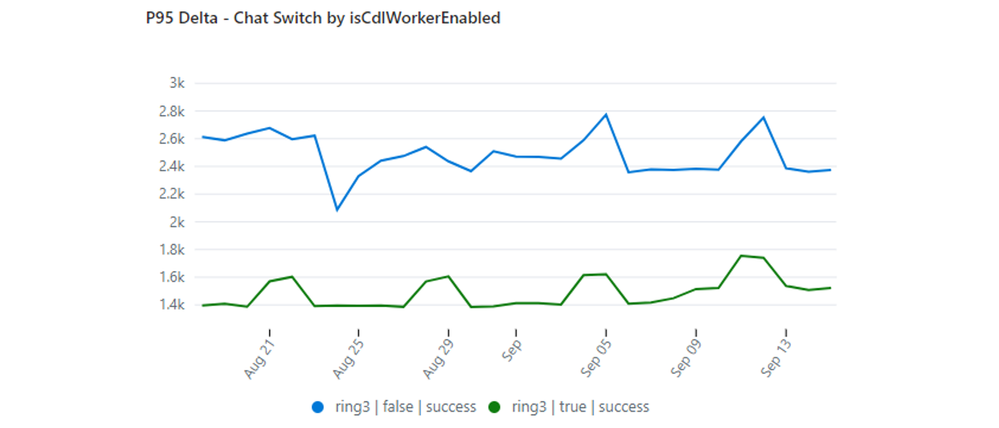

Performance improvements are typically rolled out to just a portion of the user base first, allowing us to experiment and compare metrics against the existing code used by other users. Below is an example chart generated from an A/B test as we moved data processing to a separate thread. This experiment provided real-world data to validate impacts and provide justification that we should roll out the change to the full user base.
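To make the comparison step concrete, here is a minimal sketch of how a control group and a treatment group might be compared on one latency metric. The sample data, the use of the median, and the 5% improvement margin are all assumptions for illustration; a real experiment would also need a statistical significance test before any rollout decision.

```python
from statistics import median


def relative_change(control, treatment):
    """Relative change of the treatment median versus the control median.
    For a latency metric, a negative value means the treatment is faster."""
    base = median(control)
    return (median(treatment) - base) / base


def should_expand_rollout(control, treatment, min_improvement=0.05):
    """Hypothetical decision rule: expand only if the treatment median
    improves on the control median by at least min_improvement."""
    return relative_change(control, treatment) <= -min_improvement


# Hypothetical latency samples in milliseconds.
control_ms = [200, 210, 220, 230, 240]    # existing code path
treatment_ms = [180, 185, 190, 200, 205]  # processing moved off the main thread

print(f"relative change: {relative_change(control_ms, treatment_ms):.1%}")
print("expand rollout:", should_expand_rollout(control_ms, treatment_ms))
```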

Focusing on performance while innovating

As Microsoft Teams developers introduce features, we’re closely focused on avoiding any regression of our core metrics. A combination of techniques is applied, including the use of reporting, experimentation, and gates. The gates are a set of automated tests that measure performance characteristics in a controlled environment. The performance gates cover latency, application responsiveness, memory, and disk footprint. If a threshold is breached, a live-site incident is created and assigned to the relevant team to mitigate before team members are allowed to advance the feature to the next ring.

Below is an example dashboard showing a gate for the memory that the search component consumes when Microsoft Teams is launched. Each dot represents a new change that a developer has checked in to the code base, triggering the automated test to run and collect the metric. You can see that a change made on Nov. 11 reduced the memory at startup from about 31 KB to around 30 KB. In other cases, a change has a negative impact on the metric, and we block the specific feature from progressing until a fix is in place. Gates like this are configured for a variety of scenarios, and we continue to expand the coverage we have.

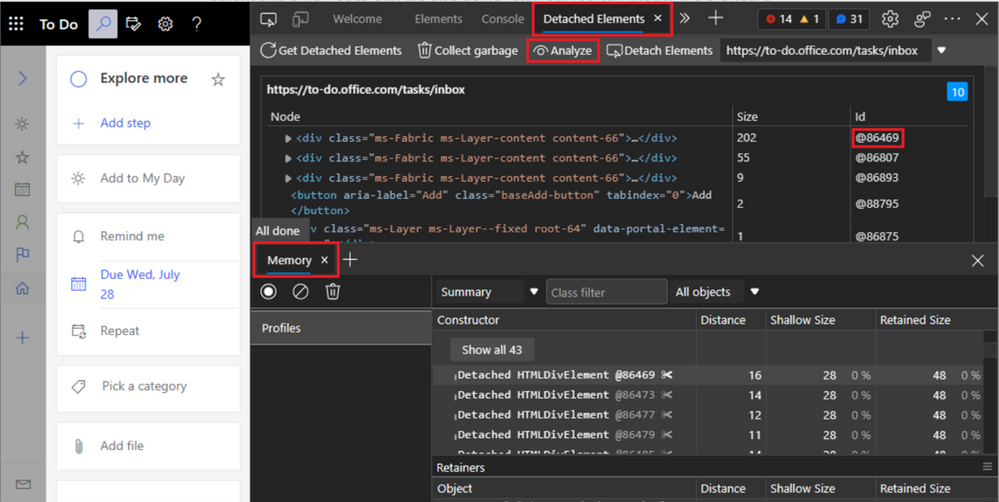
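The gate idea can be sketched as a simple threshold check that runs after each automated measurement. The metric names, threshold values, and function names below are hypothetical and chosen only for illustration; the actual gate infrastructure is not described in this post.

```python
from dataclasses import dataclass


@dataclass
class GateResult:
    metric: str
    measured: float
    threshold: float

    @property
    def passed(self):
        # A gate passes when the measured value stays at or below its threshold.
        return self.measured <= self.threshold


def evaluate_gates(measurements, thresholds):
    """Compare each gated metric against its threshold.
    A metric with no measurement is treated as a failure."""
    results = []
    for metric, threshold in thresholds.items():
        measured = measurements.get(metric, float("inf"))
        results.append(GateResult(metric, measured, threshold))
    return results


def can_advance(results):
    """A change may move to the next ring only if every gate passes."""
    return all(result.passed for result in results)


# Hypothetical thresholds and one measured run.
thresholds = {"startup_latency_ms": 1500, "disk_footprint_mb": 300}
measurements = {"startup_latency_ms": 1420, "disk_footprint_mb": 312}

results = evaluate_gates(measurements, thresholds)
for result in results:
    print(result.metric, "PASS" if result.passed else "FAIL")
print("advance to next ring:", can_advance(results))
```

In this run the disk footprint gate fails, so the change would be held back until a fix brings the metric under its threshold.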

Creating tools to benefit Teams and other products

The performance team also invests in a collection of artifacts (dashboards, debugging and monitoring tools) that enables the organization to identify strategic fixes to meet our goals quickly in a scalable and repeatable manner. One of the tools was designed to find and fix memory leaks in Teams and display them in a simple visualization. This tool was shared with the Microsoft Edge team, which added it to the Microsoft Edge DevTools under the name of Detached Elements. While our charter is focused on Microsoft Teams improvements, it is rewarding when our efforts can benefit other developers around the world.

Investing in strategic improvements

Much of our work focuses on targeted fixes with incremental gains, as well as on more structural architecture changes intended to achieve a step-function improvement in the metrics. Prioritization of these investments factors in the expected impact, how often the scenario occurs, the confidence in the fix, and the effort required to achieve targeted results. When it comes to major architectural changes, we often start with a proof of concept to validate the hypothesis. Our intent is to have continuous improvements landing every quarter and to invest in going beyond the technical constraints of the existing architecture.
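This post does not say how those four factors are combined. One simple possibility, shown purely as an assumption, is a ratio score in which impact, occurrence, and confidence raise priority and effort lowers it. The scales, example entries, and names below are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Investment:
    name: str
    impact: float      # expected improvement, on an arbitrary 1-10 scale
    occurrence: float  # fraction of sessions that hit the scenario (0-1)
    confidence: float  # confidence that the fix works as expected (0-1)
    effort: float      # estimated person-weeks, must be greater than 0

    def score(self):
        # Assumed formula: benefit factors multiplied, divided by cost.
        return self.impact * self.occurrence * self.confidence / self.effort


def prioritize(investments):
    """Return investments ordered from highest to lowest score."""
    return sorted(investments, key=lambda item: item.score(), reverse=True)


# Hypothetical backlog entries.
backlog = [
    Investment("architecture change", impact=10, occurrence=0.9, confidence=0.5, effort=20),
    Investment("targeted load-order fix", impact=6, occurrence=0.9, confidence=0.8, effort=2),
]

for item in prioritize(backlog):
    print(f"{item.name}: {item.score():.3f}")
```

A score like this favors small, well-understood fixes, which is one reason large architectural bets usually need separate justification such as a proof of concept.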

A recent strategic investment by our team aimed to speed up the initial load of the compose box, reducing the time it takes for a user to start composing a message. Analysis found that the client was loading non-visible components along with everything else and making unnecessary renders. The fix prioritized making the compose box interactive before loading all other components, hooks, and extensions. This relatively small optimization had a significant impact on this metric (see chart below) and highlights the need for us to seek out both short-term and long-term improvements.
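The load-ordering idea can be sketched independently of any UI framework. The example below uses Python's `asyncio` only to show the sequencing: the critical component is awaited first, and everything else is loaded afterwards. The component names and the `load_component` helper are hypothetical, and the Teams client itself is not implemented this way.

```python
import asyncio


async def load_component(name, load_order):
    """Stand-in for real loading work; records when the component finishes."""
    await asyncio.sleep(0)  # yield to the event loop, as real I/O would
    load_order.append(name)


async def load_chat_view():
    load_order = []

    # Critical path: the compose box must be ready before anything else starts.
    await load_component("compose_box", load_order)

    # Deferred work: non-visible components load concurrently afterwards.
    deferred = ["emoji_picker", "extensions", "formatting_hooks"]
    await asyncio.gather(*(load_component(name, load_order) for name in deferred))

    return load_order


order = asyncio.run(load_chat_view())
print(order)
```

The key design choice is that nothing on the deferred list can delay the first `await`, so time to interactivity depends only on the compose box itself.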

Advancing a performance-first culture

As the saying goes, “Culture eats strategy for breakfast,” and this certainly applies to ensuring the Microsoft Teams team is prioritizing performance. Our culture is possibly the most impactful tool to ensure our organization of designers, product managers, and engineers is building and executing with a performance-first mindset across all our features and commitments.

Shipping a feature that meets a functional need and creates a great user experience is not good enough. It also must meet the bar for the fundamental promises we make across performance, reliability, security, privacy, compliance, accessibility, manageability, scale, and operational efficiency. Driven by dedicated people on the performance team, shared priorities in listening to customers, planning, communications, and training, we are committed to addressing the needs and expectations of our users.

We would be happy to hear more from you on this topic so that we can learn and continue to improve, and we intend to publish additional blogs on key topics called out in this overview. Please share feedback and upvote your performance requests in the Teams Feedback portal.
