
Video and screen sharing are fundamental methods of delivering your message with efficiency and impact. We’re focused on optimizing these capabilities to deliver high-quality, dependable, and resilient experiences regardless of content type or network constraints.

Previously, we’ve shared how Microsoft uses AI to reduce background noise and improve the encoding of audio via our Satin codec so that it is more resilient on poor networks. This post explains how we extend AI to video and screen sharing in meetings, improving quality and reliability even in environments with network constraints.

While most organizations’ network infrastructure has improved greatly over the last few years, remote work guidance during COVID-19 has led to a surge in real-time conferencing and collaboration from home networks. Such networks often have two key constraints:

- Constrained network bandwidth: Available bandwidth fluctuates in many scenarios, such as when multiple people use the same Wi-Fi access point or when an internet service provider is overloaded in certain regions, so the video stream requires ongoing adjustment.

- Network loss: A poor connection to your Wi-Fi or mobile network access point, or congestion caused by other apps running on your device, can prevent video data from being transmitted successfully, degrading video quality. In many cases the loss isn’t randomly distributed but occurs in bursts, which further exacerbates the issue.

Constrained network bandwidth and network loss might lead to frozen video, low frame rates, or poor picture quality. Let’s explore how Teams innovations address constraints that can impact video quality during meetings.

Teams uses AI to improve video quality in constrained bandwidth scenarios

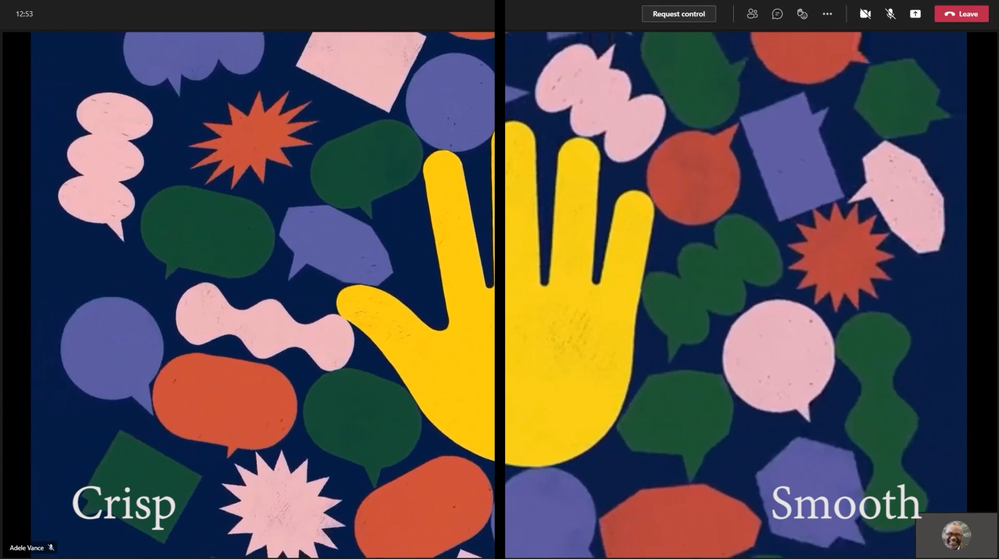

In situations where not enough bandwidth is available for the highest quality video, the encoder must trade off better picture quality against a smoother frame rate. To optimize the user experience, this trade-off must take the characteristics of the source content into account. Sharing screen content such as documents with small fonts requires optimizing spatial resolution so that the document remains legible. Conversely, if the user shares their desktop while playing a video, it’s important to optimize for a high frame rate; otherwise the shared video will not look smooth. Because shared screen content often mixes both scenarios, deciding whether to prioritize high spatial quality or smooth motion is challenging. Some apps ask the end user to make that choice through a setting, which can be a cumbersome and potentially confusing step.

To make this easier for the end user, Teams uses machine learning to understand the characteristics of the content being shared, so that participants experience the highest possible video quality in constrained bandwidth scenarios. If we detect static content such as documents or slide decks, we optimize for readability. If we detect motion, we instead optimize for smooth playback. This detection runs continuously in the user’s Teams client, so Teams can switch back and forth as the content changes. To ensure a great experience across devices, the classifier is optimized for hardware offloading and runs in real time with minimal impact on CPU and GPU usage. In the video below you can see how this optimizes the end user experience.
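Teams’ classifier is a trained, hardware-offloaded model whose internals aren’t described here. As a rough illustration of the decision it makes, the minimal sketch below swaps in a simple frame-differencing heuristic instead: it measures how much of the frame changed since the previous one and switches between a hypothetical "readability" mode and a "smoothness" mode. The thresholds, the hysteresis band, and the encoder presets are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Sequence

# A grayscale frame as rows of 0-255 pixel values (illustrative representation).
Frame = Sequence[Sequence[int]]


def changed_fraction(prev: Frame, curr: Frame, pixel_threshold: int = 8) -> float:
    """Return the fraction of pixels whose value changed by more than pixel_threshold."""
    total = 0
    changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for a, b in zip(row_prev, row_curr):
            total += 1
            if abs(a - b) > pixel_threshold:
                changed += 1
    return changed / total if total else 0.0


@dataclass
class ContentModeSelector:
    """Hypothetical motion detector with hysteresis (a heuristic, not Teams' ML classifier)."""

    enter_motion: float = 0.10  # switch to "smoothness" when more than 10% of pixels change
    exit_motion: float = 0.02   # switch back to "readability" when fewer than 2% change
    mode: str = "readability"

    def update(self, prev: Frame, curr: Frame) -> str:
        frac = changed_fraction(prev, curr)
        if self.mode == "readability" and frac > self.enter_motion:
            self.mode = "smoothness"
        elif self.mode == "smoothness" and frac < self.exit_motion:
            self.mode = "readability"
        # Between the two thresholds the current mode is kept, which avoids flapping.
        return self.mode


def encoder_settings(mode: str) -> Dict[str, float]:
    """Hypothetical presets: full resolution for static content, frame rate for motion."""
    if mode == "readability":
        return {"max_fps": 5, "resolution_scale": 1.0}
    return {"max_fps": 30, "resolution_scale": 0.5}


static = [[10] * 4 for _ in range(4)]
moving = [[200] * 4 for _ in range(4)]
selector = ContentModeSelector()
print(selector.update(static, static))  # readability
print(selector.update(static, moving))  # smoothness
```

The hysteresis band keeps the mode from flipping back and forth when a small amount of motion, such as a blinking cursor, appears on an otherwise static slide.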

In addition, when users share content with their webcam enabled, Teams balances the requirements of video and shared content by adaptively allocating bandwidth based on the needs of each content type. We have recently improved this capability by training machine learning models on a range of network characteristics and source content. This enables Teams to transmit webcam video at a higher quality and frame rate while maintaining the screen sharing experience, all within the same bandwidth.
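The post doesn’t describe how the learned allocation works. As a point of comparison, a minimal rule-based sketch of splitting one send budget between webcam video and screen sharing might look like the following; the minimum bitrates, the split ratios, and the rule of protecting the screen share first when the budget is tight are all hypothetical.

```python
from typing import Dict


def allocate_bandwidth(
    total_kbps: float,
    screen_mode: str,
    video_min_kbps: float = 150.0,
    screen_min_kbps: float = 200.0,
) -> Dict[str, float]:
    """Split a total send budget between screen sharing and webcam video.

    All constants are illustrative assumptions, not values used by Teams.
    """
    if total_kbps < video_min_kbps + screen_min_kbps:
        # Too little for both minimums: keep the shared content usable first.
        screen = min(total_kbps, screen_min_kbps)
        return {"screen_kbps": screen, "video_kbps": total_kbps - screen}

    spare = total_kbps - video_min_kbps - screen_min_kbps
    # Static documents need little extra once legible; motion in the share needs more.
    screen_share_of_spare = 0.3 if screen_mode == "readability" else 0.7
    screen = screen_min_kbps + spare * screen_share_of_spare
    video = video_min_kbps + spare * (1.0 - screen_share_of_spare)
    return {"screen_kbps": screen, "video_kbps": video}


print(allocate_bandwidth(1000, "readability"))
```

A learned model, as described above, would replace the fixed 0.3/0.7 split with allocations tuned on real network conditions and content.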

How do we improve quality during network loss?

Teams uses three complementary mechanisms to protect against network loss:

- Forward error correction (FEC): We intentionally add redundant packets so that lost packets can be reconstructed. Because this redundancy takes bandwidth that could otherwise improve the encoding of the source content, we continuously analyze network quality to determine how much of the available bandwidth to reserve for FEC.

- Long-term reference frames: In typical video coding, most frames encode only the difference from a previous frame. This means that the decoder reconstructs the current frame using the previous frame as a reference. If a frame can’t be decoded due to network loss, subsequent frames that depend on it can’t be decoded either. To mitigate this, “long-term reference frames” are introduced so that the decoder can continue even if the previous frame was lost. These long-term reference frames are more heavily protected against network loss and require more bandwidth to encode.

- Retransmission: Neither FEC nor long-term reference frames can protect against extreme cases of burst network loss. In such scenarios, the receiving client requests retransmission of the lost packets from the sending client. This lets us recover lost video frames without retransmitting the entire frame.
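The post doesn’t specify which FEC scheme Teams uses. The simplest form of the idea is XOR parity: for a group of packets, send one extra packet that is the bitwise XOR of all of them, so any single lost packet in the group can be rebuilt from the others. The sketch below assumes equal-length packets (real schemes such as the generic RTP FEC format also protect packet lengths and headers) and shows why burst loss is hard: once two packets in the same group are lost, parity alone can’t recover them and the receiver must fall back to retransmission.

```python
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Bitwise XOR of two equal-length packets."""
    if len(a) != len(b):
        raise ValueError("this sketch assumes equal-length packets")
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """Build one parity packet protecting the whole group."""
    parity = bytes(len(packets[0]))  # all-zero packet of the same length
    for packet in packets:
        parity = xor_bytes(parity, packet)
    return parity


def recover(received: List[Optional[bytes]], parity: bytes) -> Optional[List[bytes]]:
    """Rebuild at most one missing packet (None); return None if more were lost."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        return None  # burst loss exceeds what one parity packet can repair
    rebuilt = parity
    for packet in received:
        if packet is not None:
            rebuilt = xor_bytes(rebuilt, packet)
    # If nothing was missing, rebuilt is all zeros and is simply not used.
    return [p if p is not None else rebuilt for p in received]


group = [b"abcd", b"efgh", b"ijkl"]
parity = make_parity(group)
print(recover([b"abcd", None, b"ijkl"], parity))  # [b'abcd', b'efgh', b'ijkl']
print(recover([None, None, b"ijkl"], parity))     # None
```

Sending one parity packet per three media packets costs about 33% extra bandwidth, which is the kind of overhead that has to be weighed against encoding quality.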

The real challenge is balancing these three approaches to minimize the bandwidth spent protecting against network loss and maximize the bandwidth used to encode the source content. To strike the right trade-off, we use data-driven insights from our telemetry on network characteristics and their impact on the quality perceived by the end user. The two videos below show how combining these approaches allows Teams to maintain high video quality even when burst network loss increases from 5% to 10%.
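Teams derives this balance from telemetry, and the post doesn’t publish the resulting policy. To make the trade-off concrete, here is a toy rule of thumb in which every constant and the burst-length heuristic are assumptions: protection overhead grows with the measured loss rate up to a cap, longer bursts shift part of that overhead from FEC to retransmission headroom, and whatever remains goes to encoding the source content.

```python
from typing import Dict


def split_budget(
    total_kbps: float,
    loss_rate: float,
    mean_burst_len: float,
    max_protection: float = 0.4,
) -> Dict[str, float]:
    """Toy policy for dividing a send budget between media, FEC, and retransmission."""
    # Spend roughly twice the loss rate on protection, but never more than the cap.
    protection = min(max_protection, 2.0 * loss_rate)
    # Per-group parity helps less as bursts get longer, so give FEC a shrinking share.
    fec_share = 1.0 / max(1.0, mean_burst_len)
    fec_kbps = total_kbps * protection * fec_share
    rtx_kbps = total_kbps * protection * (1.0 - fec_share)
    media_kbps = total_kbps - fec_kbps - rtx_kbps
    return {"media_kbps": media_kbps, "fec_kbps": fec_kbps, "rtx_kbps": rtx_kbps}


# A 750 kbps budget, matching the bitrate of the example videos (loss figures are illustrative).
print(split_budget(750, loss_rate=0.05, mean_burst_len=1.0))
print(split_budget(750, loss_rate=0.10, mean_burst_len=2.0))
```

Every kilobit reserved for protection comes out of `media_kbps`, which is why the goal is to cover the observed loss with as little protection overhead as possible.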

Video (750 kbps, 5% burst loss)

Video (750 kbps, 10% burst loss)

If you couldn’t detect a difference in video quality between these examples of packet loss, that’s the point. By combining packet loss protection and AI-based content classification and rate control, Teams is able to deliver quality video and screen sharing even amidst network challenges.

In the not-so-distant past, presenters used video and screen sharing sparingly due to network constraints. Today, AI-driven optimizations allow Teams users to realize the benefits of video without the worry of poor bandwidth detracting from their message.

Stay tuned to this blog to learn more about Teams audio and video optimization features and improvements designed to help you get the most from your meetings and calls.
